XGBoost:

- XGBoost (Extreme Gradient Boosting) is a decision-tree-based ensemble machine learning algorithm that uses a gradient boosting framework.

- It is known for its speed and performance, making it a popular choice in many ML competitions and production systems.

- XGBoost can be used to solve both regression and classification problems.

- It is an implementation of gradient boosted decision trees, optimized for computational efficiency and scalability.

- Boosting is an ensemble technique where models are added sequentially, with each new model trying to correct the errors made by the previous ones.

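- In gradient boosting, each new model (typically a shallow decision tree) is trained to predict the residuals (errors) of the current ensemble. More precisely, it fits the negative gradient of the loss with respect to the current predictions (the "pseudo-residuals"), which equals the ordinary residual for squared-error loss. Its output, scaled by a learning rate, is then added to the previous predictions to improve accuracy.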

- It is called gradient boosting because adding each new model amounts to a step of gradient descent on the specified loss function, taken in function space rather than over a fixed set of parameters.
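The "residuals" each new model fits are, strictly speaking, the negative gradients of the loss with respect to the current prediction. For squared error they are exactly the ordinary residuals y - F; for binary log loss on a raw score F they are y - sigmoid(F). A minimal check of both closed forms against a numerical derivative, assuming these two standard loss definitions (the helper names are illustrative):

```python
import math

def sigmoid(f):
    return 1.0 / (1.0 + math.exp(-f))

def squared_loss(y, f):
    # L(y, F) = 0.5 * (y - F)^2
    return 0.5 * (y - f) ** 2

def log_loss(y, f):
    # binary log loss on the raw score F, with p = sigmoid(F)
    p = sigmoid(f)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def neg_gradient_squared(y, f):
    # dL/dF = -(y - F), so the negative gradient is the ordinary residual
    return y - f

def neg_gradient_logloss(y, f):
    # dL/dF = p - y, so the negative gradient is y - p
    return y - sigmoid(f)

def numeric_neg_gradient(loss, y, f, eps=1e-6):
    # central finite difference, used only to check the closed forms above
    return -(loss(y, f + eps) - loss(y, f - eps)) / (2 * eps)

print(neg_gradient_squared(3.0, 2.5), numeric_neg_gradient(squared_loss, 3.0, 2.5))
print(neg_gradient_logloss(1, 0.3), numeric_neg_gradient(log_loss, 1, 0.3))
```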

How does XGBoost work?

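Input Data (Train) > Initial Prediction (e.g., a constant base score) > Compute Residuals/Gradients (and Hessians) of the Loss > Train New Tree on Them (Gradient Step) > Add the Tree's Output, Scaled by the Learning Rate > Repeat for a Set Number of Rounds or Until Validation Loss Stops Improving (Early Stopping) > Final Ensemble Prediction (Sum of All Trees)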
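The flow above can be sketched from scratch for regression with squared-error loss. This is plain gradient boosting with one-split "stumps", not XGBoost itself (no regularization, no second-order statistics); the helper names, learning rate and patience value are illustrative assumptions:

```python
def fit_stump(xs, targets):
    """Fit a one-split regression stump; returns (threshold, left_value, right_value)."""
    pairs = sorted(zip(xs, targets))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate equal x values
        left = [t for _, t in pairs[:i]]
        right = [t for _, t in pairs[i:]]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        sse = sum((t - lv) ** 2 for t in left) + sum((t - rv) ** 2 for t in right)
        if best is None or sse < best[0]:
            best = (sse, (pairs[i - 1][0] + pairs[i][0]) / 2, lv, rv)
    return best[1:]

def predict_stump(stump, x):
    threshold, lv, rv = stump
    return lv if x <= threshold else rv

def mse(ys, preds):
    return sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys)

def boost(x_tr, y_tr, x_val, y_val, lr=0.3, max_rounds=200, patience=5):
    base = sum(y_tr) / len(y_tr)          # initial model: a constant prediction
    pred_tr = [base] * len(x_tr)
    pred_val = [base] * len(x_val)
    stumps = []
    best_loss, best_n, bad = mse(y_val, pred_val), 0, 0
    for _ in range(max_rounds):
        residuals = [y - p for y, p in zip(y_tr, pred_tr)]  # negative gradient of squared error
        stump = fit_stump(x_tr, residuals)                   # new tree trained on the residuals
        stumps.append(stump)
        pred_tr = [p + lr * predict_stump(stump, x) for p, x in zip(pred_tr, x_tr)]
        pred_val = [p + lr * predict_stump(stump, x) for p, x in zip(pred_val, x_val)]
        val_loss = mse(y_val, pred_val)
        if val_loss < best_loss - 1e-12:
            best_loss, best_n, bad = val_loss, len(stumps), 0
        else:
            bad += 1
            if bad >= patience:  # validation loss stopped improving: stop early
                break
    return base, stumps[:best_n], lr  # keep only the rounds up to the best one

def predict(model, x):
    base, stumps, lr = model
    return base + lr * sum(predict_stump(s, x) for s in stumps)

x = list(range(40))
y = [1.0 if xi < 20 else 3.0 for xi in x]
model = boost(x[::2], y[::2], x[1::2], y[1::2])
print(len(model[1]), predict(model, 5), predict(model, 30))
```

Each round shrinks the remaining error by the learning rate, which is why many small steps are used instead of one large one.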

Comparison among Boosting, Gradient Boosting & XGBoost:

Boosting

An ensemble method where models are added sequentially to correct errors.

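Later models focus on the examples earlier models got wrong; in AdaBoost, for example, misclassified samples are up-weighted and each model's vote is weighted by its accuracy.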

Gradient Boosting

Boosting using gradient descent to minimize loss.

Each new model learns from the residuals (negative gradients of the loss) of the ensemble built so far.

XGBoost

Optimized version of Gradient Boosting that uses both first- and second-order gradients (Hessians) of the loss when building each tree.

XGBoost enhances the basic Gradient Boosting framework through regularization, efficient handling of missing data, built-in cross-validation, customizable objectives, and system-level optimizations.

These improvements make it one of the most accurate, scalable, and widely used algorithms in applied machine learning today, particularly on structured (tabular) data.

Why Does XGBoost Perform So Well?

Regularization

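- XGBoost adds L1 (Lasso, alpha) and L2 (Ridge, lambda) penalties on the leaf weights to its objective function, plus a penalty (gamma) on the number of leaves.

- This explicit regularization is one of its most important features for preventing overfitting; standard Gradient Boosting has no such penalty term in its objective and relies instead on shrinkage, subsampling and limits on tree size.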

- It improves generalization and controls model complexity.
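Concretely, the XGBoost paper writes the objective as the training loss plus Ω(f) = γT + ½λ‖w‖² for each tree (T leaves, leaf weights w), and the library adds an optional L1 term α‖w‖₁. Minimizing a second-order approximation of that objective gives closed forms for a leaf's value and a split's gain. A minimal sketch of those formulas, where G and H are the sums of gradients and Hessians over the rows in a node; the function names are hypothetical, and lam, alpha and gamma play the roles of XGBoost's lambda (reg_lambda), alpha (reg_alpha) and gamma (min_split_loss) parameters:

```python
def soft_threshold(g, alpha):
    # L1 (alpha) shrinks the gradient sum toward zero, and to exactly zero if |g| <= alpha
    if g > alpha:
        return g - alpha
    if g < -alpha:
        return g + alpha
    return 0.0

def leaf_weight(G, H, lam=1.0, alpha=0.0):
    # optimal leaf value: w* = -T_alpha(G) / (H + lambda)
    return -soft_threshold(G, alpha) / (H + lam)

def leaf_score(G, H, lam=1.0, alpha=0.0):
    # twice the objective reduction contributed by one optimally weighted leaf
    t = soft_threshold(G, alpha)
    return t * t / (H + lam)

def split_gain(GL, HL, GR, HR, lam=1.0, alpha=0.0, gamma=0.0):
    # gain = 1/2 [score(left) + score(right) - score(parent)] - gamma
    return 0.5 * (leaf_score(GL, HL, lam, alpha)
                  + leaf_score(GR, HR, lam, alpha)
                  - leaf_score(GL + GR, HL + HR, lam, alpha)) - gamma

# Squared error: g_i = prediction - y, h_i = 1. Two rows on each side of a split.
print(leaf_weight(-4.0, 2.0))                 # lambda shrinks the leaf value toward 0
print(split_gain(-4.0, 2.0, 4.0, 2.0))        # positive: the split helps
print(split_gain(-4.0, 2.0, 4.0, 2.0, gamma=6.0))  # negative: not worth an extra leaf
```

Raising lam shrinks leaf values, raising alpha can zero out weak leaves, and raising gamma makes marginal splits unprofitable.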

Built-in Cross-Validation

- Unlike workflows where cross-validation must be set up manually (e.g., with scikit-learn), XGBoost provides a built-in cross-validation function (xgb.cv).

- It supports early stopping and reports the mean and standard deviation of the evaluation metric across folds at each boosting round, so performance can be monitored during training.

- This makes model tuning and selection more efficient.

Handling Missing Values

- XGBoost is natively designed to handle missing data.

- At each split, it learns a default direction (left or right branch) for rows whose value is missing, rather than requiring imputation.

- This makes it highly robust on real-world messy data (e.g., customer or transaction records).
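The default direction is chosen from the training data: the XGBoost paper's sparsity-aware split finding scores each candidate split with the missing rows sent left and then right, and keeps the better option. A simplified single-threshold sketch, reusing the paper's regularized gain with gamma = 0; the function names are hypothetical, missing values are represented as None, and values below the threshold go left in this sketch:

```python
def score(G, H, lam):
    return G * G / (H + lam)

def gain(GL, HL, GR, HR, lam=1.0):
    return 0.5 * (score(GL, HL, lam) + score(GR, HR, lam) - score(GL + GR, HL + HR, lam))

def best_default_direction(values, grads, hess, threshold, lam=1.0):
    """Return ('left' or 'right', gain) for routing missing values at one split."""
    GL = HL = GR = HR = Gm = Hm = 0.0
    for v, g, h in zip(values, grads, hess):
        if v is None:          # missing rows are accumulated separately
            Gm += g
            Hm += h
        elif v < threshold:
            GL += g
            HL += h
        else:
            GR += g
            HR += h
    gain_left = gain(GL + Gm, HL + Hm, GR, HR, lam)    # missing rows -> left
    gain_right = gain(GL, HL, GR + Gm, HR + Hm, lam)   # missing rows -> right
    if gain_left >= gain_right:
        return "left", gain_left
    return "right", gain_right

# The missing rows have the same gradients as the large-value rows,
# so sending them right gives the larger gain.
values = [1.0, 2.0, None, 8.0, 9.0, None]
grads = [-1.0, -1.0, 1.0, 1.0, 1.0, 1.0]
hess = [1.0] * 6
print(best_default_direction(values, grads, hess, threshold=5.0))
```

At prediction time, a row with a missing value simply follows the stored default direction at that split.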

Flexibility in Objective Functions and Evaluation Metrics

XGBoost supports:

- Built-in objectives for classification, regression, ranking, etc., as well as custom (user-defined) objective functions.

- Custom evaluation metrics, giving you fine control over how your model is trained and validated.

System Optimizations in XGBoost

Parallelization

Boosting is inherently sequential: each tree is fit to the errors of the trees before it, so neither traditional GBM nor XGBoost can build different trees in parallel. XGBoost instead parallelizes the work inside the construction of each tree.

- Normally, tree building has a nested loop structure:

  - Outer loop: enumerates the leaf nodes of the tree.

  - Inner loop: iterates over the features and evaluates candidate split points for each one.

- The inner loop is the more computationally expensive of the two, and the outer loop cannot continue until it finishes, which limits parallelism.

- XGBoost optimizes this by switching the loop order:

  - It sorts the values of each feature once, globally across all instances, using parallel threads, and keeps the sorted columns in in-memory blocks that are reused in later iterations.

  - This allows split finding to run across features simultaneously, significantly reducing runtime.
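A pure-Python sketch of that idea, assuming the exact greedy split search described in the XGBoost paper: each feature column is sorted once up front, and the best threshold for every feature is then searched concurrently. The function names are hypothetical; Python threads are limited by the GIL, so this shows the structure of the computation rather than a real speed-up, and recent XGBoost versions default to a histogram-based method (tree_method="hist") instead of exact pre-sorting:

```python
from concurrent.futures import ThreadPoolExecutor

def presort_columns(X):
    # X is a list of rows; for each feature, return row indices sorted by that feature.
    # Done once before training and reused for every node and tree.
    return [sorted(range(len(X)), key=lambda r, j=j: X[r][j]) for j in range(len(X[0]))]

def best_split_for_feature(j, X, order, grads, hess, lam=1.0):
    G, H = sum(grads), sum(hess)
    parent = G * G / (H + lam)
    GL = HL = 0.0
    best = (0.0, None)  # (gain, threshold)
    for k in range(len(order) - 1):
        r, r_next = order[k], order[k + 1]
        GL += grads[r]
        HL += hess[r]
        if X[r][j] == X[r_next][j]:
            continue  # cannot split between equal values
        GR, HR = G - GL, H - HL
        gain = 0.5 * (GL * GL / (HL + lam) + GR * GR / (HR + lam) - parent)
        if gain > best[0]:
            best = (gain, (X[r][j] + X[r_next][j]) / 2)
    return j, best

def find_best_split(X, sorted_cols, grads, hess, n_threads=4):
    # one task per feature: the per-feature scans are independent of each other
    with ThreadPoolExecutor(max_workers=n_threads) as pool:
        results = list(pool.map(
            lambda j: best_split_for_feature(j, X, sorted_cols[j], grads, hess),
            range(len(sorted_cols)),
        ))
    j, (gain, threshold) = max(results, key=lambda item: item[1][0])
    return j, threshold, gain

X = [[1.0, 5.0], [2.0, 3.0], [3.0, 8.0], [4.0, 1.0]]
grads = [-1.0, -1.0, 1.0, 1.0]
hess = [1.0, 1.0, 1.0, 1.0]
sorted_cols = presort_columns(X)
print(find_best_split(X, sorted_cols, grads, hess))
```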

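Tree Pruning (Grow to max_depth, Then Prune Backward)

- Traditional GBM typically uses a greedy stopping rule: a node stops splitting as soon as the best candidate split produces no loss reduction, so trees keep splitting as long as the loss is reduced.

- Without a depth limit this can lead to deep, overfit trees, especially in noisy datasets, and the greedy stop can also miss a useful split that only pays off after a seemingly unhelpful one.

XGBoost instead uses a “max_depth” parameter together with backward pruning:

- It first grows the tree up to max_depth.

- Then it applies backward pruning (also called post-pruning), removing splits from the bottom up whose loss reduction is below a minimum threshold (gamma, also called min_split_loss).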
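A pure-Python sketch of gamma-based backward pruning, assuming each node stores the gradient and Hessian sums (G, H) of its rows: working bottom-up, a split is collapsed into a single leaf when both of its children are leaves and its gain is below gamma. A weak split therefore survives if a deeper split beneath it is worth keeping. The tree and the function names are hypothetical:

```python
def node_score(node, lam):
    return node["G"] ** 2 / (node["H"] + lam)

def split_gain(node, lam):
    # loss reduction of a split, before subtracting gamma
    return 0.5 * (node_score(node["left"], lam) + node_score(node["right"], lam)
                  - node_score(node, lam))

def leaf_value(node, lam=1.0):
    return -node["G"] / (node["H"] + lam)

def prune(node, gamma, lam=1.0):
    if "left" not in node:  # already a leaf
        return node
    left = prune(node["left"], gamma, lam)
    right = prune(node["right"], gamma, lam)
    node = {**node, "left": left, "right": right}
    if "left" not in left and "left" not in right and split_gain(node, lam) < gamma:
        return {"G": node["G"], "H": node["H"]}  # collapse the split into one leaf
    return node

# Root split is weak (gain 0.2), its left split is useless, its right split is strong.
tree = {
    "G": 0.0, "H": 8.0,
    "left": {"G": -1.0, "H": 4.0,
             "left": {"G": -0.5, "H": 2.0}, "right": {"G": -0.5, "H": 2.0}},
    "right": {"G": 1.0, "H": 4.0,
              "left": {"G": -3.0, "H": 2.0}, "right": {"G": 4.0, "H": 2.0}},
}
pruned = prune(tree, gamma=1.0)
print(pruned["left"], leaf_value(pruned["left"]))  # left split collapsed into a leaf
print("left" in pruned)                            # root kept because the right split pays off
```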

Hardware Optimization

XGBoost is designed to maximize hardware utilization, especially for large datasets:

Cache awareness:

Each thread fetches gradient and Hessian statistics into its own internal buffer and accumulates them in mini-batches, improving cache locality and reducing memory access time.

Out-of-core computation:

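For datasets that don’t fit in memory, XGBoost can train from external memory (disk).

It uses block compression (data blocks are compressed on disk and decompressed on the fly by a separate thread) and block sharding (data is split across multiple disks that are read in parallel), enabling scalable training without requiring huge RAM.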
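A pure-Python sketch of the basic out-of-core idea only: rows live on disk and are streamed in fixed-size blocks, so memory holds one block plus a small per-bin gradient/Hessian histogram at a time. The file layout, block size, bin edges and function names are assumptions for illustration; the library's real external-memory machinery (compression, sharding, prefetch threads) is far more involved:

```python
import bisect
import os
import struct
import tempfile

ROW = struct.Struct("<fff")  # one row on disk: (feature value, gradient, hessian) as float32

def write_blocks(path, rows):
    with open(path, "wb") as f:
        for row in rows:
            f.write(ROW.pack(*row))

def stream_blocks(path, rows_per_block):
    # yield one block of rows at a time instead of loading the whole file
    with open(path, "rb") as f:
        while True:
            chunk = f.read(ROW.size * rows_per_block)
            if not chunk:
                break
            yield [ROW.unpack_from(chunk, i) for i in range(0, len(chunk), ROW.size)]

def gradient_histogram(path, bin_edges, rows_per_block=1024):
    n_bins = len(bin_edges) + 1
    G = [0.0] * n_bins
    H = [0.0] * n_bins
    for block in stream_blocks(path, rows_per_block):
        for value, g, h in block:
            b = bisect.bisect_right(bin_edges, value)  # bin index for this feature value
            G[b] += g
            H[b] += h
    return G, H

rows = [(float(i % 10), 1.0 if i % 2 else -1.0, 1.0) for i in range(10_000)]
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "rows.bin")
    write_blocks(path, rows)
    G, H = gradient_histogram(path, bin_edges=[2.5, 5.0, 7.5], rows_per_block=1024)
print(G, H)
```

Split gains can then be computed from these per-bin sums without revisiting individual rows.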

Pros of XGBoost

High Performance: Fast training through parallel split finding and hardware optimizations, and high accuracy helped by regularization.

Handles Missing Data: Automatically learns the best direction for missing values.

Scalable: Works well with large datasets and supports out-of-core computation.

Cons of XGBoost

Complex Tuning: Requires careful hyperparameter tuning to avoid overfitting.

Memory Intensive: Can consume significant memory, especially on large datasets.

Overkill on Small Datasets: For small or simple problems, its tuning effort and complexity may not pay off compared with simpler models.

Python Implementation for XGBoost

# Step 1: Import required libraries
from xgboost import XGBClassifier
from sklearn.metrics import classification_report, accuracy_score
import matplotlib.pyplot as plt
from tensorflow.keras.datasets import fashion_mnist

# Step 2: Load the Fashion MNIST dataset (60,000 training and 10,000 test images)
(X_train, y_train), (X_test, y_test) = fashion_mnist.load_data()

# Step 3: Preprocess data - flatten 28x28 images into 784 features and scale to [0, 1]
X_train_flat = X_train.reshape(-1, 28 * 28) / 255.0
X_test_flat = X_test.reshape(-1, 28 * 28) / 255.0

# Step 4: Initialize and train the XGBoost classifier (10 classes are inferred from y_train)
model_xgb = XGBClassifier()
model_xgb.fit(X_train_flat, y_train)

# Step 5: Make predictions
y_predictxgb = model_xgb.predict(X_test_flat)

# Step 6: Evaluate performance
accuracy = accuracy_score(y_test, y_predictxgb)
print(f"Accuracy: {accuracy:.4f}")
print(classification_report(y_test, y_predictxgb))

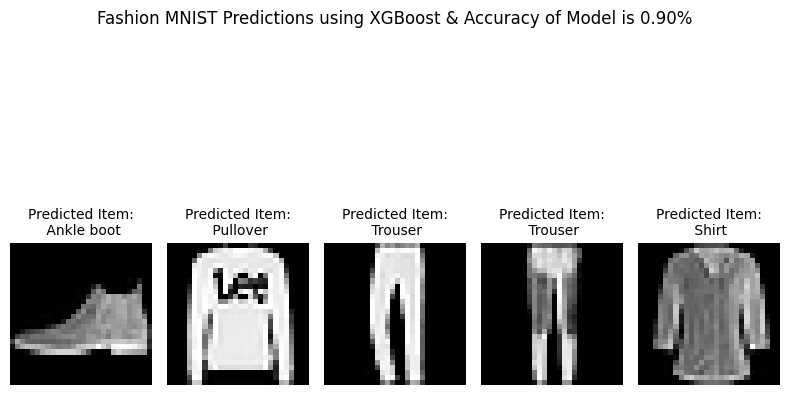

# Step 7: Visualize a few test predictions
fashion_labels = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
                  'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']
plt.figure(figsize=(8, 6))
for i in range(5):
    plt.subplot(1, 5, i + 1)
    plt.imshow(X_test[i], cmap='gray')
    plt.title(f"Predicted:\n{fashion_labels[int(y_predictxgb[i])]}", fontsize=10)
    plt.axis('off')
plt.suptitle(f"Fashion MNIST Predictions (XGBClassifier)\nAccuracy: {accuracy:.2%}")
plt.tight_layout()
plt.show()